As a QA professional, one of the most important aspects of my job is to provide clear, concise, and actionable evidence of what went wrong during test execution. In the early days of test automation, this was limited to screenshots. Screenshots captured what was on the screen when a failure occurred—useful, but often lacking critical context.

Over time, I realized that screenshots alone didn’t tell the full story. They didn’t capture the steps leading up to a failure, the system state, or the underlying network requests that could help diagnose the issue. This led me to a shift in how we handled test evidence—a shift from screenshots to test videos.

In this post, I’ll walk you through how this evolution took place, why it mattered so much for our testing process, and how video-based evidence improved our ability to debug and collaborate across teams.

The Limitation of Screenshots in Test Evidence

Screenshots were the standard method for capturing failure points in tests. But as helpful as they were, they came with significant limitations:

1. Lack of Context

A screenshot could show you an error message or a UI element that failed, but it didn’t explain how the test arrived at that point. Without context, it was hard to determine what went wrong before the failure.

For example, a screenshot might show a button that could not be clicked, but it wouldn’t show whether the page had loaded correctly or whether the backend had already run into problems before the click was attempted.

2. No Visibility Into Test Steps

Test scripts often involve multiple steps: clicking on buttons, filling forms, making API requests, etc. Screenshots only captured the final state, making it hard to reconstruct the exact sequence of actions that led to the failure.

3. Inconsistent Quality

Not all failures could be captured in a screenshot. For example, if an issue only occurred intermittently or was due to a race condition, screenshots might not provide the necessary information to diagnose the problem.

4. Limited Debugging Value

While screenshots are great for visual validation, they’re not as effective for debugging deeper issues related to network requests, server errors, or data inconsistencies. This meant that even though we had evidence of a failure, we still often couldn’t pinpoint the cause without additional investigation.

The Shift to Test Videos: What Changed?

The move from screenshots to test videos wasn’t just a technological upgrade—it was a mindset shift. Here’s why switching to videos made such a significant impact:

1. Complete Context and Step-by-Step Visibility

Test videos provided a complete, continuous view of the entire test scenario. Instead of seeing just the point of failure, we could now watch the test run from start to finish. This gave us visibility into every action, decision, and page transition.

For example, if a test failed at the login step, we could watch the video to see whether the form was filled correctly, whether form validation raised any errors, or whether the page showed signs of a network problem (such as a stalled spinner or an error banner) before the failure.

2. Easier Reproduction of Failures

Videos gave us the ability to replay the exact sequence of events leading up to a failure. This made reproducing issues far easier, especially when collaborating with developers. Instead of relying on testers to describe what happened, the video provided an objective, clear timeline of actions and outcomes.

3. Rich Debugging Information

Unlike screenshots, videos showed how the UI behaved over time, including each interaction and how the frontend reacted to responses from the server. This added value when troubleshooting issues that were more complex than a simple UI failure. A video does not record network traffic itself, so we reviewed it alongside the test logs to confirm whether requests were correct and whether the backend was responding properly; the video then showed how the frontend handled those responses.

Videos also allowed us to capture things like delays, race conditions, and intermittent issues that wouldn’t be visible in a screenshot.

4. Improved Communication with Developers

Having test videos to share meant that we could demonstrate failures more effectively and help developers understand issues faster.

5. Better Documentation for Test Evidence

Videos provided a more comprehensive record of what occurred during testing. When issues were logged in our defect management system, we could attach both the test video and screenshots for clarity. This made it easier to track issues over time and meant the evidence could be replayed whenever a defect was revisited.

How We Integrated Test Videos Into Our Workflow

The transition from screenshots to videos wasn’t instant—it required a few key steps to integrate test videos into our workflow. Here’s how we made it happen:

1. Selecting the Right Tool

We chose to integrate video recording into our test automation framework. Cypress and Playwright both offer built-in ways to capture video during test execution, while Selenium has no recorder of its own. For instance, Cypress can record every run in its headless run mode through its video configuration option (the default value depends on the Cypress version, so it is worth checking), and Playwright can record per browser context. In Selenium, we used third-party libraries and browser driver capabilities to capture screen activity.

2. Configuring Video Capture for Every Test

We set up our test framework to automatically capture a video for every test run in both local and CI/CD environments. The video file was automatically stored along with the test logs and screenshots. This ensured that every test execution came with full visual evidence of the steps taken.
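
To make this step more concrete, here is a minimal sketch of per-test recording using Playwright's Python API, where passing `record_video_dir` to `browser.new_context` turns on recording for every page in that context. The helper name `run_with_video`, the `VideoResult` type, the `videos` directory, and the `test_fn` callback are assumptions made for illustration; this is not our actual framework code.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Any, Callable, Optional


@dataclass
class VideoResult:
    video_path: Path
    error: Optional[Exception]  # None when the test body passed


def run_with_video(browser: Any, test_fn: Callable[[Any], None],
                   video_dir: str = "videos") -> VideoResult:
    """Run test_fn against a fresh page and report where its video was saved.

    `browser` is expected to be a Playwright Browser (sync API).
    """
    # record_video_dir enables recording for every page in this context.
    context = browser.new_context(record_video_dir=video_dir)
    page = context.new_page()
    error: Optional[Exception] = None
    try:
        test_fn(page)
    except Exception as exc:
        # Keep the failure so the caller can still locate the video for it.
        error = exc
    finally:
        # The video is only guaranteed to be on disk after the context closes.
        context.close()
    return VideoResult(video_path=Path(page.video.path()), error=error)
```

With Playwright installed, the `browser` argument would typically come from `p.chromium.launch()` inside a `with sync_playwright() as p:` block. Returning the error instead of re-raising it is a design choice in this sketch: the failing runs are exactly the ones where the caller needs the video path.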

3. Automating Test Video Uploads

To make sharing and collaboration easier, we automated the process of uploading videos to our test management platform. Once the tests completed, the videos were automatically attached to the test case results, allowing testers, developers, and managers to review the footage directly within the system.
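
The upload step could look roughly like the sketch below. It assumes each recording has already been saved as `<test_id>.webm` in a single directory, and it takes the upload function as a parameter because every test management platform exposes a different API. The `attach_videos` name, the file naming scheme, and the `upload` callback are all hypothetical.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable


def attach_videos(video_dir: Path, test_ids: Iterable[str],
                  upload: Callable[[str, Path], str]) -> Dict[str, str]:
    """Upload <video_dir>/<test_id>.webm for each test.

    `upload` stands in for whatever client your test management platform
    provides; it receives (test_id, file_path) and returns a reference
    to the stored attachment. Returns a mapping of test_id -> reference.
    """
    attached: Dict[str, str] = {}
    for test_id in test_ids:
        video = video_dir / f"{test_id}.webm"
        if not video.is_file():
            # No recording for this test (for example, it was skipped).
            continue
        attached[test_id] = upload(test_id, video)
    return attached
```

Keeping the platform client behind a plain callable means the same collection logic can run in a local session and in CI/CD, with only the `upload` function swapped out.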

4. Analyzing Videos Alongside Logs and Screenshots

We also integrated video analysis into our test reports. After each test run, videos were displayed alongside detailed logs and screenshots. This provided a rich, multi-layered view of test results, which was especially helpful when analyzing complex failures.
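
As an illustration of what "videos alongside logs and screenshots" can mean in practice, here is a small sketch that renders each test's evidence into one HTML section using only the Python standard library. The `Evidence` fields and the `render_report` function are assumptions for this example, not the format of any particular reporting tool.

```python
from dataclasses import dataclass
from html import escape
from typing import List, Optional


@dataclass
class Evidence:
    name: str
    passed: bool
    log: str
    video: Optional[str] = None       # relative path to the recording
    screenshot: Optional[str] = None  # relative path to a failure screenshot


def render_report(results: List[Evidence]) -> str:
    """Build a single HTML page with one section per test."""
    sections = []
    for r in results:
        status = "PASSED" if r.passed else "FAILED"
        parts = [f"<h2>{escape(r.name)}: {status}</h2>"]
        if r.video:
            # The browser's native player lets reviewers scrub through the run.
            parts.append(f'<video controls src="{escape(r.video)}"></video>')
        if r.screenshot:
            parts.append(f'<img src="{escape(r.screenshot)}" alt="screenshot">')
        # Logs are escaped so markup in error messages cannot break the page.
        parts.append(f"<pre>{escape(r.log)}</pre>")
        sections.append("<section>" + "".join(parts) + "</section>")
    return "<html><body>" + "".join(sections) + "</body></html>"
```

Placing the video, screenshot, and log in the same section lets a reviewer line up what the log reports with what happened on screen, without switching tools.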

The Impact of Test Videos on Our QA Process

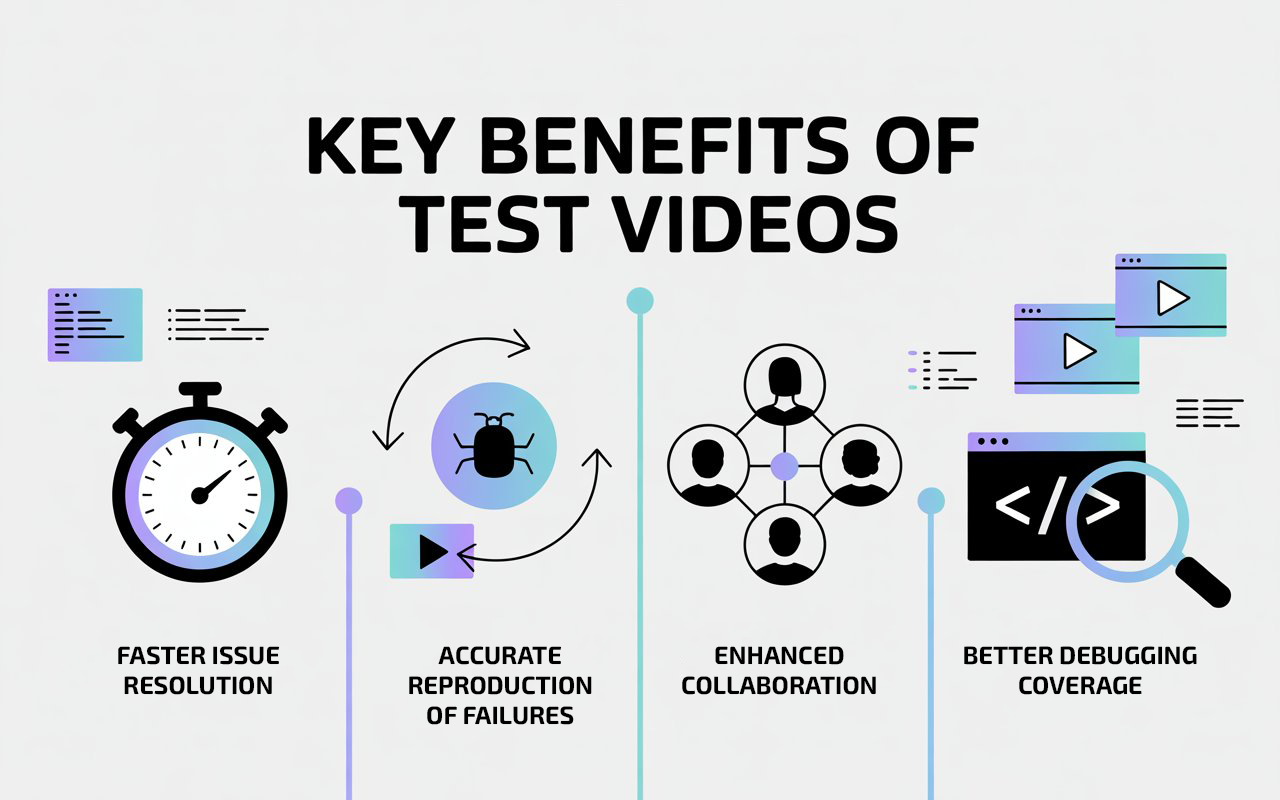

Since making the shift to test videos, we’ve seen several significant benefits:

1. Faster Issue Resolution

With videos, issues could be understood and addressed faster. Developers no longer had to ask “what happened before the failure?”—they could watch the entire test unfold and immediately see where things went wrong.

2. More Accurate Reproduction of Failures

Having a video allowed us to reproduce issues more accurately, reducing the back-and-forth between teams. Instead of testers trying to explain what happened, the video spoke for itself.

3. Enhanced Collaboration Across Teams

Test videos improved communication with developers and other stakeholders. Videos eliminated the guesswork and allowed for more focused discussions about what needed to be fixed.

4. Better Coverage and Debugging

We were able to debug issues much more effectively. Videos offered a full picture of how the test ran on screen, including all interactions and visual changes, and reviewing them next to the logs tied those moments to the underlying network requests. This made debugging far more efficient.

Conclusion: The Future of QA Evidence

The move from screenshots to test videos revolutionized how we approach test evidence. While screenshots still serve as a quick and easy way to capture failures, test videos have become our go-to tool for debugging, collaboration, and communicating with developers. They provide context, visibility, and clarity that screenshots simply cannot match.

For QA professionals looking to improve their testing workflow, integrating test videos into the process is well worth the effort. As testing becomes more complex, video evidence provides the detailed insight needed to identify, debug, and resolve issues faster, ultimately helping us deliver higher-quality software in less time.

FAQs

Q1. Why are screenshots not enough for QA test evidence?

Screenshots show only the final state and lack context. They do not capture step-by-step interactions, network activity, or UI behavior leading up to a failure.

Q2. How do test videos improve debugging in QA?

Test videos provide complete playback of actions, UI states, and timing, making it easier to reproduce issues, identify race conditions, and analyze where exactly the failure occurred.

Q3. Which test automation tools support video recording?

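Cypress and Playwright include built-in video recording, while Selenium relies on third-party libraries or browser driver capabilities to capture video during test execution. In each case, the result is a recording of the full run that can be played back afterwards.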

Q4. How do test videos help developers during issue resolution?

Videos eliminate guesswork by visually showing what happened. Developers can watch the failure sequence directly, speeding up collaboration and reducing back-and-forth clarification.

Author’s Bio:

Content Writer at Testleaf, specializing in SEO-driven content for test automation, software development, and cybersecurity. I turn complex technical topics into clear, engaging stories that educate, inspire, and drive digital transformation.

Ezhirkadhir Raja

Content Writer – Testleaf