In 2026, QA engineers are no longer just writing test cases and executing regression suites. They are designing prompts, validating AI-generated scripts, reviewing model outputs, and working alongside intelligent agents that assist in defect prediction, test optimization, and log analysis.

The real question today is not:

“Should QA engineers use generative AI?”

The better question is:

Which generative AI model is reliable, scalable, and safe enough for production-grade testing?

While many blogs list popular AI models, very few evaluate them from a QA engineering perspective. This article goes deeper: it compares the top generative AI models of 2026 specifically for testing workflows, automation reliability, and engineering precision.

What are the best generative AI models for QA engineers in 2026?

The top models include GPT-4o/5, Gemini, Claude, Copilot, LLaMA, Mistral, and Grok. Each serves different QA needs, such as automation, log analysis, and UI validation.

The Rise of AI-Augmented QA

Industry research shows accelerated AI adoption in engineering:

- McKinsey reports that AI-assisted development can improve productivity by 20–45% depending on task complexity.

- Gartner predicts AI augmentation will be embedded into most enterprise software workflows by 2026.

- According to the Stack Overflow Developer Survey, adoption of AI coding assistants in daily engineering tasks is increasing rapidly.

However, productivity gains alone are not enough for QA.

Testing demands:

- Deterministic validation

- Risk awareness

- Repeatability

- Traceability

This means QA engineers must evaluate AI models differently from marketers, content creators, or business analysts.

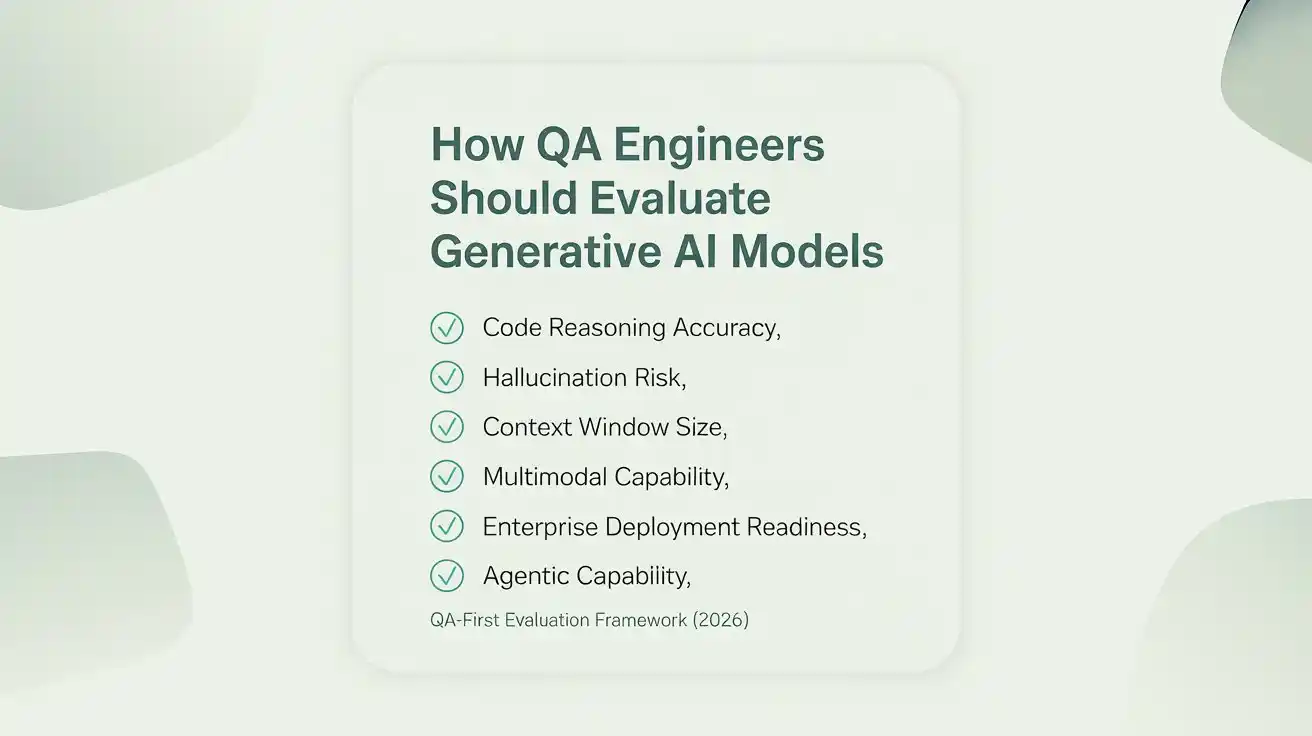

How QA Engineers Should Evaluate Generative AI Models

Before comparing models, here is a structured evaluation framework tailored for QA professionals:

1. Code Reasoning Accuracy

Does the model generate syntactically correct and logically sound automation scripts?

2. Hallucination Risk

Does it confidently produce incorrect locators or APIs, or make unstated assumptions?

3. Context Window Size

Can it process long test logs, large requirement documents, or multi-file frameworks?

4. Multimodal Capability

Can it interpret UI screenshots and visual flows?

5. Enterprise Deployment Readiness

Does it support secure APIs, on-prem deployment, or compliance needs?

6. Agentic Capability

Can it handle multi-step reasoning and structured workflows?

Scoring models against these six criteria, rather than general popularity, is what separates serious QA analysis from generic AI comparisons.
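One way to make the six criteria actionable is a weighted scorecard. The sketch below is a minimal Python example under stated assumptions: the weights, the 1 to 5 scale, and the names `CRITERIA_WEIGHTS`, `ModelEvaluation`, and `rank` are all hypothetical, and the scores should come from your own pilot runs against your own test suite rather than from vendor claims.

```python
from dataclasses import dataclass

# Hypothetical weights for the six criteria above; tune them to your
# team's priorities. They sum to 1.0, so the result stays on the 1-5 scale.
CRITERIA_WEIGHTS = {
    "code_reasoning": 0.25,
    "hallucination_risk": 0.25,  # rate so that a higher score means LOWER risk
    "context_window": 0.15,
    "multimodal": 0.10,
    "enterprise_readiness": 0.15,
    "agentic": 0.10,
}


@dataclass
class ModelEvaluation:
    name: str
    scores: dict[str, float]  # 1-5 rating per criterion, from your own pilot

    def weighted_score(self) -> float:
        # Refuse to score a model that was not rated on every criterion.
        missing = set(CRITERIA_WEIGHTS) - set(self.scores)
        if missing:
            raise ValueError(f"{self.name} is missing scores for: {sorted(missing)}")
        return sum(w * self.scores[c] for c, w in CRITERIA_WEIGHTS.items())


def rank(evaluations: list[ModelEvaluation]) -> list[ModelEvaluation]:
    """Return evaluations sorted from highest to lowest weighted score."""
    return sorted(evaluations, key=lambda e: e.weighted_score(), reverse=True)


if __name__ == "__main__":
    # Placeholder candidates with made-up ratings, for illustration only.
    candidates = [
        ModelEvaluation("model-a", {c: 4 for c in CRITERIA_WEIGHTS}),
        ModelEvaluation("model-b", {**{c: 3 for c in CRITERIA_WEIGHTS}, "multimodal": 5}),
    ]
    for e in rank(candidates):
        print(f"{e.name}: {e.weighted_score():.2f}")
```

Keeping the weights in one dictionary makes the team's priorities explicit and reviewable, which matters more than the exact numbers.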

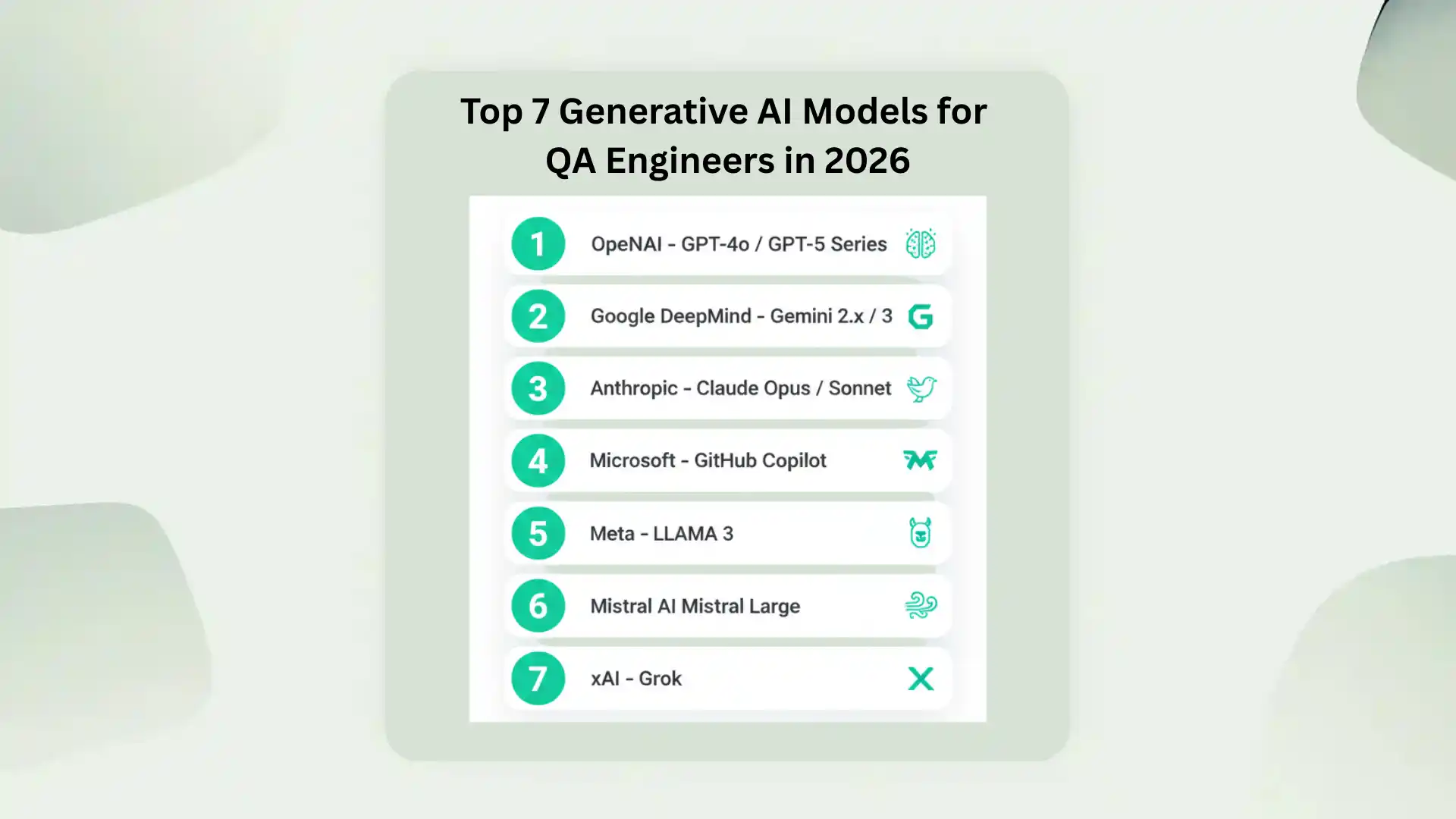

Top 7 Generative AI Models for QA Engineers in 2026

1. OpenAI – GPT-4o / GPT-5 Series

Best For

- Selenium & Playwright script generation

- API test case creation

- Converting user stories into structured test cases

- Defect log summarization

Strengths

- Strong reasoning across code and natural language

- High-quality automation scaffolding

- Fast iteration for test refinement

Limitations

- Can hallucinate element locators without context

- Requires structured prompting for stable outputs
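Structured prompting is easier to enforce when the prompt asks for a fixed output shape and the reply is checked mechanically. A minimal sketch, assuming a team-defined JSON format with `id`, `title`, `steps`, and `expected_result` keys (a hypothetical schema, not a vendor feature). `build_prompt` and `parse_test_cases` are illustrative helpers; sending the prompt to a model is left out because that depends on your provider's SDK.

```python
import json

# Hypothetical prompt template that pins the output to a fixed JSON shape
# so the reply can be validated by code instead of read by eye.
PROMPT_TEMPLATE = """You are a QA engineer. Convert the user story below into test cases.
Respond with ONLY a JSON array. Each element must have exactly these keys:
"id" (string), "title" (string), "steps" (array of strings), "expected_result" (string).

User story:
{story}
"""

# Expected key -> Python type after json.loads().
REQUIRED_KEYS = {"id": str, "title": str, "steps": list, "expected_result": str}


def build_prompt(story: str) -> str:
    return PROMPT_TEMPLATE.format(story=story.strip())


def parse_test_cases(raw_reply: str) -> list[dict]:
    """Parse and validate a model reply; raise ValueError on any deviation."""
    try:
        cases = json.loads(raw_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply is not valid JSON: {exc}") from exc
    if not isinstance(cases, list) or not cases:
        raise ValueError("Expected a non-empty JSON array of test cases")
    for i, case in enumerate(cases):
        if not isinstance(case, dict):
            raise ValueError(f"Case {i} is not a JSON object")
        if set(case) != set(REQUIRED_KEYS):
            raise ValueError(f"Case {i} has keys {sorted(case)}, expected {sorted(REQUIRED_KEYS)}")
        for key, expected_type in REQUIRED_KEYS.items():
            if not isinstance(case[key], expected_type):
                raise ValueError(f"Case {i}: '{key}' should be {expected_type.__name__}")
        # Steps must be a non-empty list of non-blank strings.
        if not case["steps"] or not all(isinstance(s, str) and s.strip() for s in case["steps"]):
            raise ValueError(f"Case {i}: 'steps' must be a non-empty list of non-empty strings")
    return cases
```

Rejecting a malformed reply outright, instead of trying to repair it, keeps the pipeline deterministic: the model is simply asked again.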

Strategic Insight

Best used with a clear validation layer. It is a powerful assistant, not a replacement for QA judgment.
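A validation layer can start small. The sketch below, assuming you can capture an HTML snapshot of the page under test, checks whether AI-suggested locators of the form `#some-id` or `[data-testid="..."]` actually exist in that snapshot, and routes any other locator style (XPath, complex CSS) to manual review. `check_locators` is a hypothetical helper built only on Python's standard library; it cannot see content rendered after the snapshot was taken.

```python
import re
from html.parser import HTMLParser


class _AttributeCollector(HTMLParser):
    """Collects id and data-testid attribute values from an HTML snapshot."""

    def __init__(self):
        super().__init__()
        self.ids = set()
        self.test_ids = set()

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing tags via the default handle_startendtag.
        for name, value in attrs:
            if name == "id" and value:
                self.ids.add(value)
            elif name == "data-testid" and value:
                self.test_ids.add(value)


# Only two simple locator styles are verified; everything else needs a human.
ID_SELECTOR = re.compile(r"^#([A-Za-z][\w-]*)$")
TESTID_SELECTOR = re.compile(r"^\[data-testid=[\"']([^\"']+)[\"']\]$")


def check_locators(html_snapshot: str, locators: list[str]) -> dict[str, str]:
    """Map each locator to 'ok', 'missing', or 'needs_review'."""
    collector = _AttributeCollector()
    collector.feed(html_snapshot)
    collector.close()
    results = {}
    for locator in locators:
        if m := ID_SELECTOR.match(locator):
            results[locator] = "ok" if m.group(1) in collector.ids else "missing"
        elif m := TESTID_SELECTOR.match(locator):
            results[locator] = "ok" if m.group(1) in collector.test_ids else "missing"
        else:
            results[locator] = "needs_review"
    return results
```

For example, `check_locators(html, ["#username", "#password"])` on a snapshot containing only `<input id="username">` would mark `#password` as `missing`, which is exactly the kind of hallucinated locator this layer is meant to catch.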

2. Google DeepMind – Gemini 2.x / 3

Best For

- Multimodal UI validation

- Requirement document parsing

- Screenshot-based test suggestions

Strengths

- Strong multimodal understanding

- Effective long-context processing

Limitations

- Automation script precision may vary

- Requires refinement for structured frameworks

Gemini is particularly useful when QA workflows include visual validation and documentation-heavy systems.

3. Anthropic – Claude Opus / Sonnet

Best For

- Analyzing long test reports

- Reviewing logs in regulated industries

- Risk-focused documentation analysis

Strengths

- Large context window

- Conservative reasoning style

Limitations

- Less aggressive in automation code generation

Claude performs well in analytical QA scenarios where reliability is critical.
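When a test report is larger than a model's context window, a common workaround is to split it into overlapping chunks and analyze them one at a time. The sketch below is a minimal line-based version of that idea. It uses a character budget (`max_chars`, with a hypothetical default) as a rough proxy for tokens, since real token counts depend on each model's tokenizer; `chunk_log` is an illustrative name.

```python
def chunk_log(lines: list[str], max_chars: int = 12_000, overlap_lines: int = 5) -> list[list[str]]:
    """Split log lines into chunks of roughly max_chars characters.

    Lines are never split. The last `overlap_lines` lines of each chunk are
    repeated at the start of the next one so a stack trace that crosses a
    boundary keeps some context. A chunk can exceed the budget when a single
    line (plus the carried-over overlap) is longer than max_chars.
    """
    if max_chars <= 0 or overlap_lines < 0:
        raise ValueError("max_chars must be positive and overlap_lines non-negative")
    chunks: list[list[str]] = []
    current: list[str] = []
    size = 0
    for line in lines:
        line_len = len(line) + 1  # +1 for the newline separator
        if current and size + line_len > max_chars:
            chunks.append(current)
            # Note: current[-0:] would copy the whole list, hence the guard.
            current = current[-overlap_lines:] if overlap_lines else []
            size = sum(len(l) + 1 for l in current)
        current.append(line)
        size += line_len
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent with the same analysis prompt, and the per-chunk summaries combined in a final pass.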

4. Microsoft – GitHub Copilot

Best For

- Writing automation inside the IDE

- Refactoring existing test suites

- Generating unit tests

Strengths

- Deep IDE integration

- Context-aware code suggestions

Limitations

- Limited context beyond the current project

- Not optimized for long-form analytical tasks

Copilot enhances day-to-day automation productivity within development environments.

5. Meta – LLaMA 3

Best For

- On-prem enterprise deployment

- Security-sensitive environments

- Custom QA agents

Strengths

- Open-weight, self-hostable flexibility

- Fine-tuning capability

Limitations

- Requires infrastructure setup

- Needs ML expertise

Ideal for enterprises building internal AI-powered QA ecosystems.

6. Mistral AI – Mistral Large

Best For

- Lightweight AI integrations

- Cost-sensitive automation pipelines

Strengths

- Efficient performance

- Flexible deployment options

Limitations

- Smaller ecosystem compared to larger providers

Suitable for teams experimenting with AI-driven testing without heavy infrastructure investment.

7. xAI – Grok

Best For

- Real-time debugging research

- Exploratory problem-solving

Strengths

- Fast, clear conversational responses

- Access to up-to-date information

Limitations

- Not optimized for structured automation frameworks

Useful for investigation and technical research, but not a primary automation engine.

Comparison Snapshot for QA Engineers

| Model | Code Generation | Log Analysis | Multimodal | Enterprise Ready | Best Use |
|---|---|---|---|---|---|
| GPT-4o/5 | High | High | Moderate | Strong API | Automation + QA workflows |
| Gemini | Moderate | High | Strong | Cloud-based | UI + document analysis |
| Claude | Moderate | Very High | Limited | Enterprise-ready | Log & compliance review |
| Copilot | High (IDE) | Low | No | Enterprise IDE | Script writing |
| LLaMA | Customizable | Customizable | Depends | On-prem | Enterprise AI agents |
| Mistral | Moderate | Moderate | Limited | Flexible | Lightweight AI |
| Grok | Moderate | Moderate | Limited | Cloud | Research & debugging |

The Biggest Mistake QA Engineers Make with Generative AI

The most common mistake is blind trust.

Generative AI can:

- Produce incorrect locators

- Assume missing business logic

- Simplify edge cases incorrectly

- Create brittle automation scripts

QA engineers must:

- Validate AI outputs

- Structure prompts carefully

- Apply review layers

- Treat AI as augmentation, not replacement

In 2026, the competitive edge for QA professionals is not just automation skill. It is AI orchestration skill.

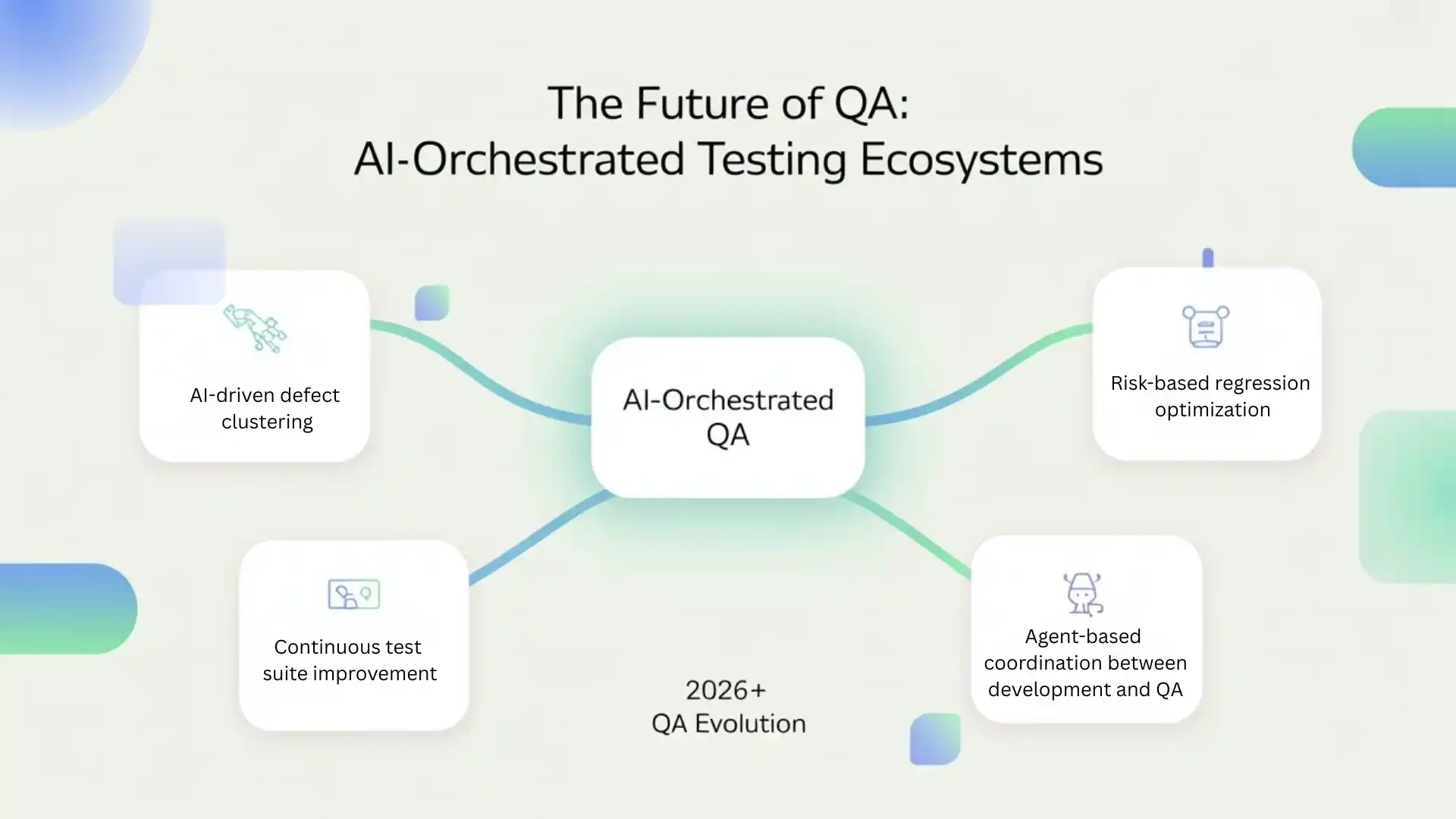

The Future of QA: AI-Orchestrated Testing Ecosystems

Testing is evolving toward:

- AI-driven defect clustering

- Risk-based regression optimization

- Autonomous test generation

- Continuous test suite improvement

- Agent-based coordination between development and QA
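Before layering AI on top, it helps to have a deterministic baseline for defect clustering. A simple, non-AI approach is to normalize failure messages (masking UUIDs, hex addresses, quoted strings, and numbers) and group tests that share the resulting signature. The sketch below shows that signature-based grouping; `failure_signature` and `cluster_failures` are hypothetical names, and the masking patterns are assumptions to tune against your own logs.

```python
import re
from collections import defaultdict

# Patterns for values that vary between otherwise identical failures.
# Order matters: UUIDs and hex addresses are masked before generic numbers.
_NORMALIZERS = [
    (re.compile(r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b", re.I), "<uuid>"),
    (re.compile(r"0x[0-9a-f]+", re.I), "<hex>"),
    (re.compile(r"'[^']*'|\"[^\"]*\""), "<str>"),
    (re.compile(r"\d+(?:\.\d+)?"), "<num>"),
]


def failure_signature(message: str) -> str:
    """Reduce a failure message to a stable signature."""
    sig = message.strip()
    for pattern, token in _NORMALIZERS:
        sig = pattern.sub(token, sig)
    return sig


def cluster_failures(failures: dict[str, str]) -> dict[str, list[str]]:
    """Group test names (keys) by the signature of their failure message (values)."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for test_name, message in failures.items():
        clusters[failure_signature(message)].append(test_name)
    # Largest clusters first: these are the likeliest shared root causes.
    return dict(sorted(clusters.items(), key=lambda kv: len(kv[1]), reverse=True))
```

A baseline like this also gives you something to compare an AI-driven clustering tool against: if the tool cannot beat simple signatures on your own failure history, it is not yet earning its place in the pipeline.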

This shift does not eliminate QA engineers. It elevates them.

There is no single “best” model. The right choice depends on:

- Your testing stack

- Data sensitivity requirements

- Automation maturity

- Infrastructure readiness

- Risk tolerance

The real advantage lies not in choosing one model, but in understanding how to use AI responsibly and strategically.

As the industry continues to evolve, one thing is clear:

Learning AI in software testing is no longer optional; it is the fastest way to future-proof your QA career.

FAQs

Q1. Which AI model is best for QA automation in 2026?

GPT-4o/5 is widely used for automation script generation, while Gemini excels in UI validation and Claude in log analysis.

Q2. Can generative AI replace QA engineers?

No. AI assists in automation, but human validation, critical thinking, and test strategy are still essential.

Q3. How is AI used in software testing?

AI helps in test case generation, defect prediction, log analysis, test data creation, and automation optimization.

Q4. What are the risks of using AI in QA?

The main risks are hallucinated outputs, incorrect locators, missing edge cases, and over-reliance on AI without validation.


Author’s Bio:

Content Writer at Testleaf, specializing in SEO-driven content for test automation, software development, and cybersecurity. I turn complex technical topics into clear, engaging stories that educate, inspire, and drive digital transformation.

Ezhirkadhir Raja

Content Writer – Testleaf