GenAI can accelerate test design, maintenance, and failure triage—but it also introduces new risks: hallucinated assertions, hidden flakiness, and privacy/governance gaps. The QA teams that win in 2026 won’t be “the ones using AI.” They’ll be the ones who can engineer trust: grounded context, constraints, verification gates, and observable evidence.

Why 2026 feels like a turning point

In many organizations, GenAI is no longer a “nice-to-try”—it’s being piloted or deployed across workflows. The World Quality Report 2025 says 89% of respondents are piloting or deploying GenAI-augmented workflows (37% in production, 52% in pilots).

But adoption doesn’t automatically mean scaling responsibly. The same report notes a more grounded reality: non-adopters increased to 11% (up from 4% in 2024), suggesting teams are getting serious about readiness, value, and risk—not just experimentation.

At Testleaf, we see the same pattern in the skills market: people can get GenAI to generate a test—yet struggle to make that test reliable in CI, safe with data, and useful when it fails. That’s the real shift.

The real benefits (when used like an engineering tool, not a magic wand)

GenAI has genuine upside in testing, especially where time is wasted on repetitive work and low-signal triage. For example, the State of Testing report lists improved automation efficiency and more realistic test data generation among the most frequently cited benefits.

Here are the benefits that consistently hold up in practice:

1) Faster test ideation (from “requirements” to scenario coverage)

GenAI is excellent at converting:

- user stories → scenario lists

- support tickets → regression candidates

- production incidents → “never again” checks

This isn’t replacing test design—it’s making test design start from a wider coverage baseline, faster.

2) Test maintenance that reduces “framework debt”

Used correctly, GenAI can help:

- refactor repeated patterns

- standardize page objects / fixtures

- simplify brittle steps into intent-based actions

- propose better naming and structure

The value isn’t “AI wrote code.” The value is that your suite becomes easier to maintain.

3) Failure triage that turns noise into signal

GenAI can summarize:

- flaky patterns across runs

- log clusters

- probable root cause areas (auth vs data vs timing vs environment)

This aligns with Gartner’s guidance that AI can improve testing activities like planning, creation, maintenance, data generation, and defect analysis.
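Triage summaries tend to be more trustworthy when the model starts from grouped evidence rather than raw logs. Here is a minimal sketch, assuming failure messages are available as plain strings. The category names follow the four areas above, while the regex patterns are illustrative assumptions to tune for your own stack, not a standard taxonomy.

```python
import re
from collections import Counter

# Illustrative patterns per root-cause area (assumptions: tune them to your own logs).
# Order matters: the first matching category wins.
CATEGORY_PATTERNS = {
    "auth": re.compile(r"\b(401|403|unauthori[sz]ed|forbidden|token expired)\b", re.I),
    "timing": re.compile(r"\b(timeout|timed out|exceeded)\b", re.I),
    "data": re.compile(r"\b(not found|duplicate key|constraint|null value)\b", re.I),
    "environment": re.compile(r"\b(ECONNREFUSED|ECONNRESET|connection refused|502|503|DNS)\b", re.I),
}


def categorize(message: str) -> str:
    """Return the first root-cause area whose pattern matches, else 'unknown'."""
    for category, pattern in CATEGORY_PATTERNS.items():
        if pattern.search(message):
            return category
    return "unknown"


def cluster_failures(messages: list[str]) -> Counter:
    """Count failures per root-cause area across one or more CI runs."""
    return Counter(categorize(m) for m in messages)


if __name__ == "__main__":
    failures = [
        "GET /api/orders returned 401 Unauthorized",
        "Timeout 30000ms exceeded waiting for locator",
        "connect ECONNREFUSED 127.0.0.1:5432",
        "Timeout 5000ms exceeded waiting for response",
        "insert failed: duplicate key value violates unique constraint",
    ]
    # Counts like these, plus one sample message per bucket, make a compact model prompt.
    print(cluster_failures(failures).most_common())
```

Keeping this step deterministic makes the grouping reproducible and reviewable; the model is then asked to explain the clusters, not to invent them.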


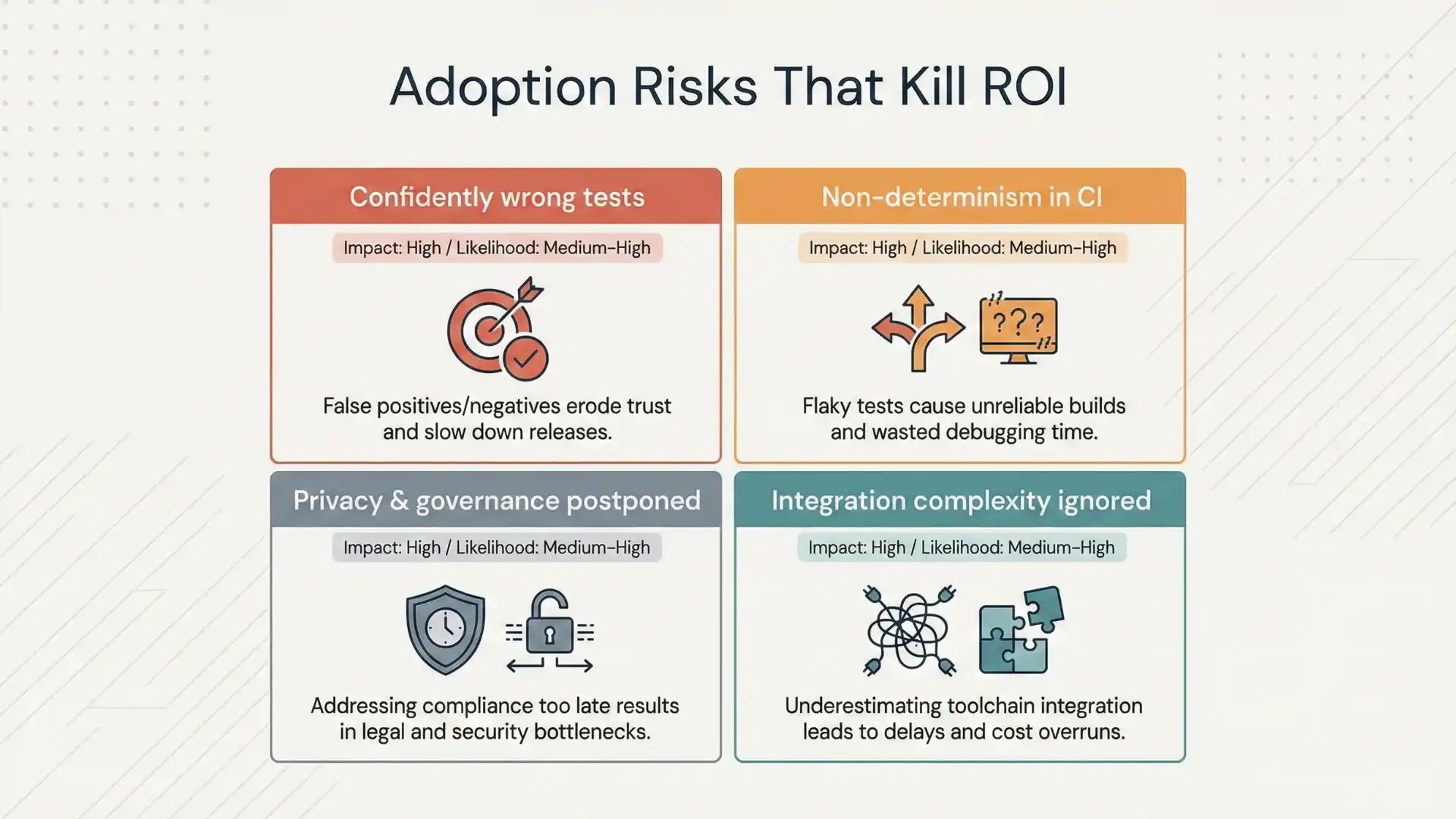

The limits that matter (the failure modes people underestimate)

If you want an evergreen view of GenAI in testing, don’t list “limitations” as generic warnings. Think in failure modes—because that’s how production incidents happen.

Failure mode A: “Confidently wrong” tests

GenAI can produce tests that:

- assert the wrong outcome

- pass while validating nothing meaningful

- use selectors/APIs that don’t exist

- look professional enough to skip review

This is not a minor issue—it’s quality theater.

Failure mode B: Non-determinism sneaks into CI

A test generated quickly becomes a long-term liability if it:

- depends on unstable UI timing

- shares state across tests

- relies on the order of execution

- changes meaning when the environment changes
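Order dependence and shared state are easy to detect mechanically: run the same tests in several orders from a fresh state and flag any test whose result changes. Here is a minimal, framework-free sketch; the three checks and the shared `CART` list are hypothetical stand-ins for generated tests that leak state.

```python
import random
from typing import Callable, Optional

CheckFn = Callable[[], None]

# Hypothetical shared state that leaks between checks (the bug we want to expose).
CART: list[str] = []


def reset_state() -> None:
    CART.clear()


def cart_starts_empty() -> None:
    assert CART == []


def add_item() -> None:
    CART.append("book")
    assert "book" in CART


def arithmetic() -> None:
    assert 2 + 2 == 4


CHECKS: dict[str, CheckFn] = {f.__name__: f for f in (cart_starts_empty, add_item, arithmetic)}


def run_in_order(checks: dict[str, CheckFn], order: list[str]) -> dict[str, bool]:
    """Run checks in the given order and record pass (True) or fail (False)."""
    results = {}
    for name in order:
        try:
            checks[name]()
            results[name] = True
        except Exception:  # assertion failures and errors both count as failures
            results[name] = False
    return results


def find_order_dependent(checks: dict[str, CheckFn],
                         setup: Optional[Callable[[], None]] = None,
                         shuffles: int = 5, seed: int = 1234) -> set[str]:
    """Return the names of checks whose outcome depends on execution order."""
    declared = list(checks)
    # The reversed order flips every pairwise ordering; seeded shuffles add more variety.
    orders = [declared, declared[::-1]]
    rng = random.Random(seed)
    for _ in range(shuffles):
        order = declared[:]
        rng.shuffle(order)
        orders.append(order)

    outcomes: dict[str, set[bool]] = {name: set() for name in declared}
    for order in orders:
        if setup is not None:
            setup()  # fresh state per round, like a new CI job
        for name, passed in run_in_order(checks, order).items():
            outcomes[name].add(passed)
    return {name for name, seen in outcomes.items() if len(seen) > 1}


if __name__ == "__main__":
    print(find_order_dependent(CHECKS, setup=reset_state))
```

A test flagged here usually needs its own setup or an isolated fixture, not a retry.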

Failure mode C: Privacy and governance are treated as “later”

The fastest way to create risk is to paste:

- real customer data

- internal logs with secrets

- proprietary business rules

into prompts without a policy.

Many teams learn this only after someone asks, “Where did that context go?”
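Redacting by default before anything reaches a prompt is one way to make that policy enforceable rather than aspirational. Here is a minimal sketch using Python's `re` module. The four patterns are illustrative assumptions: they will both miss and over-match in real logs, and they are no substitute for a reviewed data policy.

```python
import re

# Illustrative rules only (assumptions): they will miss some secrets and over-match
# some harmless strings, so treat them as a safety net behind a reviewed policy.
REDACTION_RULES = [
    # Email addresses
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[REDACTED_EMAIL]"),
    # 13 to 16 digits, optionally separated by spaces or dashes (card-like numbers)
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[REDACTED_CARD]"),
    # Bearer tokens in Authorization headers
    (re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/=-]+"), "Bearer [REDACTED_TOKEN]"),
    # key=value or key: value secrets; keep the key name so the context stays readable
    (re.compile(r"(?i)\b(api[_-]?key|password|secret)(\s*[:=]\s*)\S+"), r"\1\2[REDACTED_SECRET]"),
]


def redact(text: str) -> str:
    """Apply every rule in order before the text is allowed into a prompt."""
    for pattern, replacement in REDACTION_RULES:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    log_line = ("user priya@example.com paid with 4111 1111 1111 1111; "
                "Authorization: Bearer abc.def.ghi; api_key=sk_live_123")
    print(redact(log_line))
```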

Failure mode D: Integration complexity is ignored

It’s easy to demonstrate GenAI in a chat window.

It’s hard to make it work across:

- repos, PR reviews, and coding standards

- CI/CD gates

- traceability requirements

- audit needs for regulated industries

Even broader AI adoption research shows a similar pattern: measurable enterprise-wide impact often lags behind “use-case benefits,” reinforcing that scaling is the hard part.


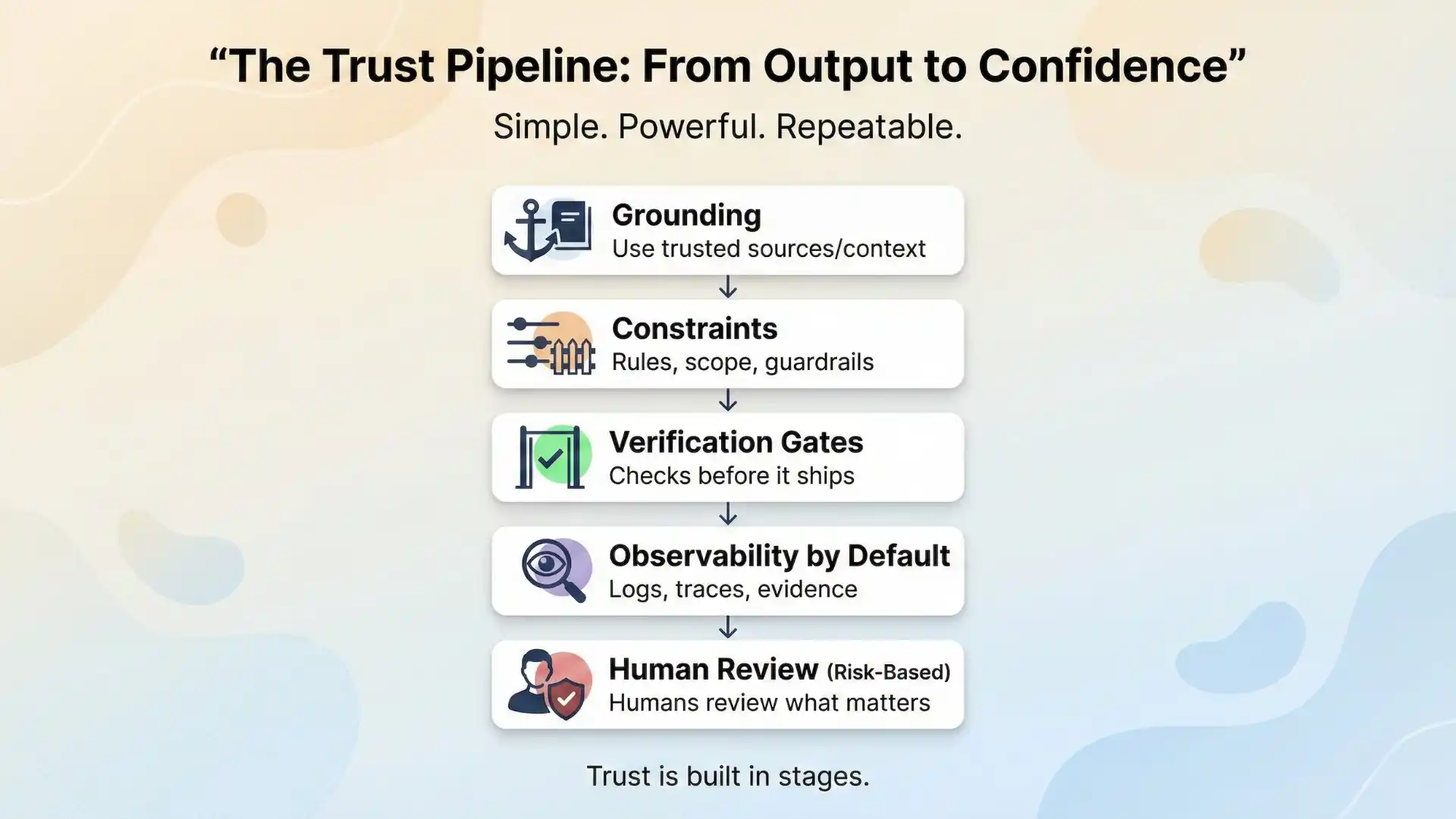

The 2026 differentiator: engineering trust (not just using GenAI)

Here’s the model we teach, both internally and to the teams we train: the Trust Pipeline.

If your GenAI output can’t pass through this pipeline, it doesn’t belong in production QA.

The Trust Pipeline (simple, powerful, repeatable)

1. Grounding

Give the model correct, bounded context (DOM snapshot, API schema, acceptance criteria, known constraints).
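One way to keep context bounded is to send the model a compact list of the page's interactive elements and labels instead of the full DOM. Here is a sketch using the standard-library `html.parser`; which tags and attributes count as useful context (`CONTEXT_TAGS` and `KEPT_ATTRS` below) is an assumption to adjust for your app.

```python
from html.parser import HTMLParser

# Tags assumed useful for role/label-style locators; adjust for your app.
CONTEXT_TAGS = {"a", "button", "input", "label", "select", "textarea"}
KEPT_ATTRS = ("role", "aria-label", "name", "type", "placeholder", "id", "for")
TEXT_TAGS = ("a", "button", "label")  # elements whose visible text we also keep


class ContextExtractor(HTMLParser):
    """Collect interactive elements and labels from a DOM snapshot."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: list[dict] = []
        self._open = None  # element whose visible text is currently being collected

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag in CONTEXT_TAGS or "role" in attr_map:
            entry = {"tag": tag, **{k: v for k, v in attr_map.items() if k in KEPT_ATTRS}}
            self.elements.append(entry)
            if tag in TEXT_TAGS:
                self._open = entry

    def handle_data(self, data):
        if self._open is not None and data.strip():
            self._open["text"] = (self._open.get("text", "") + " " + data.strip()).strip()

    def handle_endtag(self, tag):
        if self._open is not None and tag == self._open["tag"]:
            self._open = None


def bounded_context(html: str, limit: int = 50) -> list[dict]:
    """Return at most `limit` elements to include in the prompt."""
    parser = ContextExtractor()
    parser.feed(html)
    parser.close()
    return parser.elements[:limit]


SNAPSHOT = """
<form>
  <label for="email">Email</label>
  <input id="email" type="email" name="email">
  <div class="promo"><span>Decorative banner text</span></div>
  <button type="submit">Sign in</button>
</form>
"""

if __name__ == "__main__":
    for element in bounded_context(SNAPSHOT):
        print(element)
```

The decorative banner never reaches the model, so it cannot be "creatively" used as a selector.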

2. Constraints

Force structured output:

- “Use role/label locators only”

- “Must include negative cases”

- “Must include explicit assertions”

- “Return in this template”
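Constraints only help if something enforces them. Here is a sketch, assuming the prompt asks for JSON in a hypothetical shape (`tests`, each with `name`, `kind`, `locators`, `assertions`). The check rejects output that lacks a negative case or explicit assertions, or that uses locators outside the allowed role/label strategies. The `getByRole(`/`getByLabel(` prefixes assume Playwright's JavaScript/TypeScript locator style written out as strings.

```python
import json

# Mirrors "Use role/label locators only"; assumes Playwright JS/TS locator strings.
ALLOWED_LOCATOR_PREFIXES = ("getByRole(", "getByLabel(")
REQUIRED_KEYS = {"name", "kind", "locators", "assertions"}


def validate_generated_plan(raw: str) -> list[str]:
    """Return constraint violations; an empty list means the output passed."""
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    tests = plan.get("tests") if isinstance(plan, dict) else None
    if not isinstance(tests, list) or not tests:
        return ["missing a non-empty 'tests' list"]

    problems = []
    for i, case in enumerate(tests):
        if not isinstance(case, dict):
            problems.append(f"tests[{i}]: not an object")
            continue
        missing = REQUIRED_KEYS - set(case)
        if missing:
            problems.append(f"tests[{i}]: missing keys {sorted(missing)}")
            continue
        if not case["assertions"]:
            problems.append(f"{case['name']}: no explicit assertions")
        for locator in case["locators"]:
            if not str(locator).startswith(ALLOWED_LOCATOR_PREFIXES):
                problems.append(f"{case['name']}: disallowed locator {locator!r}")
    if not any(isinstance(c, dict) and c.get("kind") == "negative" for c in tests):
        problems.append("no negative cases")
    return problems


# Hypothetical model output that breaks three of the constraints.
MODEL_OUTPUT = json.dumps({
    "tests": [
        {"name": "pay with valid card", "kind": "positive",
         "locators": ["getByRole('button', { name: 'Pay' })"],
         "assertions": ["order status is PAID"]},
        {"name": "pay with expired card", "kind": "positive",
         "locators": ["#pay-btn"], "assertions": []},
    ]
})

if __name__ == "__main__":
    for problem in validate_generated_plan(MODEL_OUTPUT):
        print(problem)
```

Rejected output can be sent back to the model together with the violation list, which is usually more effective than rewording the prompt by hand.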

3. Verification gates

Treat GenAI output like untrusted code:

- lint/format checks

- compile/run checks

- test runs in CI

- assertion quality checks (does the test assert the outcome, or merely that an animation or element appeared?)

4. Observability by default

Every failure must produce artifacts:

- screenshot / trace

- logs

- request/response evidence

- reproduction steps
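Artifact capture works best when it is wired in once rather than remembered per test. Here is a sketch of a Python decorator that, on failure, writes a JSON evidence bundle (captured log lines, traceback, inputs) before re-raising. The `artifacts/` folder, the bundle fields, and `checkout_total_matches` are assumptions for illustration. Screenshots and traces would come from your browser tool, for example Playwright's `screenshot` and `trace` test options.

```python
import functools
import json
import logging
import traceback
from datetime import datetime, timezone
from pathlib import Path

ARTIFACT_DIR = Path("artifacts")  # assumed location; point it at your CI's artifact folder


class ListHandler(logging.Handler):
    """Keep formatted log lines in memory so they can be attached on failure."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))


def capture_artifacts(test_fn):
    """On failure, write logs, traceback and inputs to ARTIFACT_DIR, then re-raise."""

    @functools.wraps(test_fn)
    def wrapper(*args, **kwargs):
        handler = ListHandler()
        root = logging.getLogger()
        previous_level = root.level
        root.addHandler(handler)
        root.setLevel(logging.DEBUG)
        try:
            return test_fn(*args, **kwargs)
        except Exception as exc:
            ARTIFACT_DIR.mkdir(parents=True, exist_ok=True)
            bundle = {
                "test": test_fn.__name__,
                "failed_at": datetime.now(timezone.utc).isoformat(),
                "error": repr(exc),
                "traceback": traceback.format_exc(),
                "logs": handler.lines,
                # Inputs are the minimum needed to reproduce the failure.
                "inputs": {"args": [repr(a) for a in args],
                           "kwargs": {k: repr(v) for k, v in kwargs.items()}},
            }
            (ARTIFACT_DIR / f"{test_fn.__name__}.json").write_text(json.dumps(bundle, indent=2))
            raise
        finally:
            root.removeHandler(handler)
            root.setLevel(previous_level)

    return wrapper


@capture_artifacts
def checkout_total_matches(order_id: str) -> None:
    """Hypothetical check that fails for any order other than A-2."""
    logging.getLogger("checkout").info("loaded order %s", order_id)
    assert order_id == "A-2", "total mismatch"
```

Calling `checkout_total_matches("A-1")` still raises `AssertionError`, so the test fails as usual, but `artifacts/checkout_total_matches.json` now holds the evidence a reviewer (or a triage model) needs.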

5. Human review focused on risk

Reviewers don’t check “style.” They check:

- what’s being asserted

- what could break

- what data is used

- what would cause false passes

This is how you move from “AI helps” to “AI is safe.”


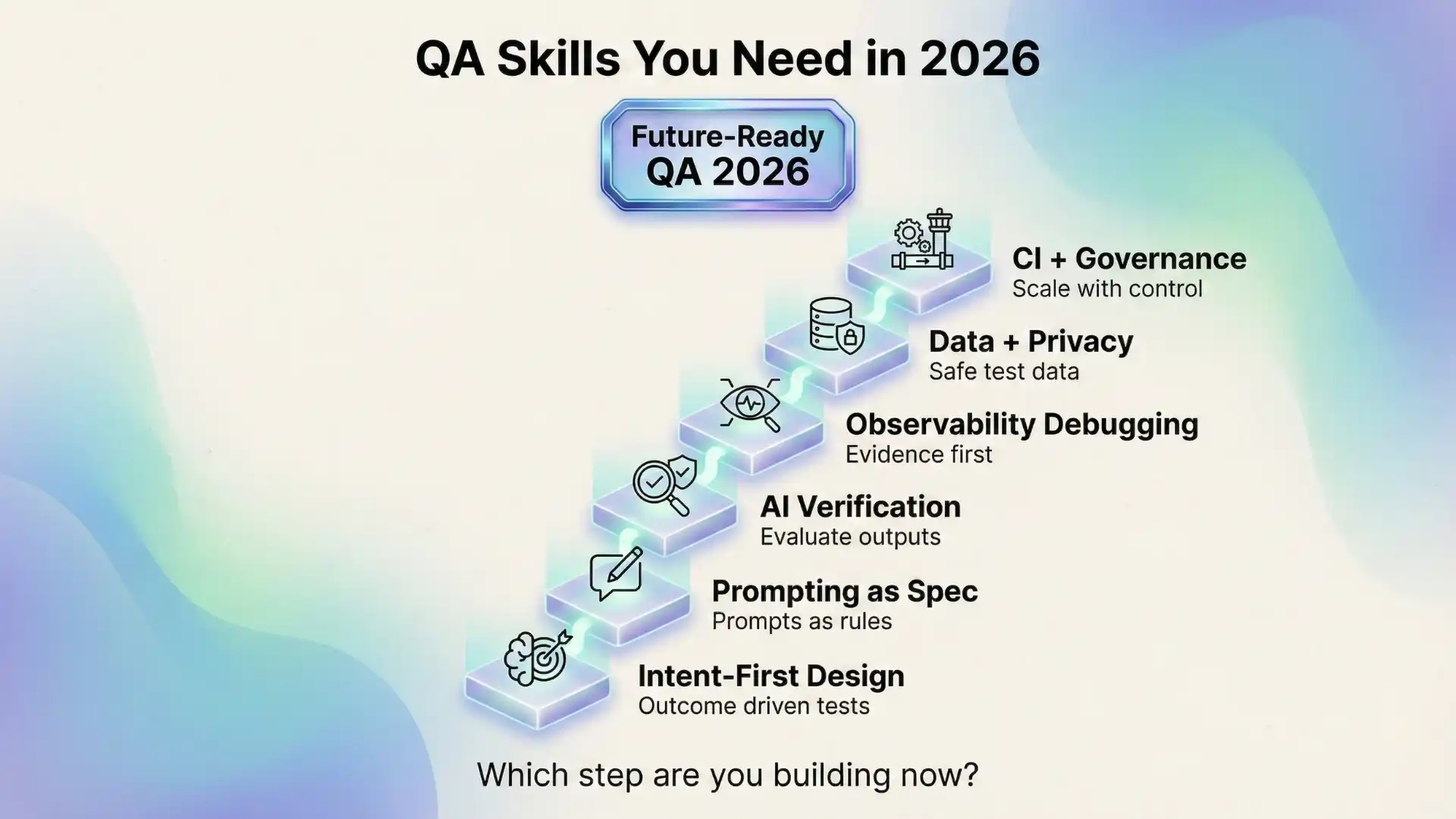

The new QA skillset for 2026 (what to learn that actually compounds)

The World Quality Report 2025–26 ranks GenAI among the top skills for quality engineers, with 63% of respondents rating it as critical.

But the real question is: what does “GenAI skill” mean in QA?

Here’s the 2026-ready skillset—defined as measurable capabilities, not buzzwords:

1) Intent-first test design

You write tests around outcomes:

- “User can pay successfully”

- “Unauthorized access is blocked”

Not around clicking sequences.
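The difference shows up directly in code: the test names the business outcome, hides the steps behind an intent-level helper, and asserts on the resulting state. Here is a self-contained sketch in which `FakeShop` is a hypothetical in-memory stand-in for the system under test; against a real UI, `user_pays` would wrap the locator-level steps instead.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FakeShop:
    """Hypothetical in-memory stand-in for the system under test."""
    logged_in_user: Optional[str] = None
    orders: list[dict] = field(default_factory=list)

    def login(self, user: str) -> None:
        self.logged_in_user = user

    def pay(self, amount: float) -> dict:
        if self.logged_in_user is None:
            raise PermissionError("login required")
        order = {"user": self.logged_in_user, "amount": amount, "status": "PAID"}
        self.orders.append(order)
        return order


def user_pays(shop: FakeShop, user: str, amount: float) -> dict:
    """Intent-level helper: one business action; the individual steps stay inside."""
    shop.login(user)
    return shop.pay(amount)


def check_user_can_pay_successfully() -> None:
    shop = FakeShop()
    order = user_pays(shop, "priya", 49.99)
    # Assert the outcome (resulting state), not that a button was clicked.
    assert order["status"] == "PAID"
    assert shop.orders == [order]


def check_unauthorized_payment_is_blocked() -> None:
    shop = FakeShop()
    try:
        shop.pay(10.0)
    except PermissionError:
        pass
    else:
        raise AssertionError("payment without login should be blocked")
    assert shop.orders == []  # and no order was created


if __name__ == "__main__":
    check_user_can_pay_successfully()
    check_unauthorized_payment_is_blocked()
    print("both outcome checks passed")
```

If the checkout UI changes, only `user_pays` needs updating; both outcome checks keep their meaning.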

2) Prompting as specification (not chatting)

You can produce a prompt that includes:

- scope, constraints, acceptance criteria

- edge cases

- output structure

- definition of done
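Treating the prompt as a specification suggests building it from structured fields rather than typing it freely, so missing parts are caught before the model ever sees it. Here is a sketch; `PromptSpec`, its default constraints, and the output shape are hypothetical examples to replace with your team's own template.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Structured test-generation prompt; every field is part of the contract."""
    feature: str
    scope: str
    acceptance_criteria: list[str]
    edge_cases: list[str]
    constraints: list[str] = field(default_factory=lambda: [
        "Use role/label locators only",
        "Every test must assert the business outcome explicitly",
    ])
    output_format: str = 'JSON: {"tests": [{"name", "kind", "locators", "assertions"}]}'
    definition_of_done: list[str] = field(default_factory=lambda: [
        "At least one negative case per acceptance criterion",
        "No fixed sleeps; wait on observable state",
    ])

    def render(self) -> str:
        """Fail fast on an incomplete spec, then render a stable, reviewable prompt."""
        if not self.acceptance_criteria:
            raise ValueError("spec has no acceptance criteria")

        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items)

        return "\n\n".join([
            f"Feature: {self.feature}\nScope: {self.scope}",
            f"Acceptance criteria:\n{bullets(self.acceptance_criteria)}",
            f"Edge cases to cover:\n{bullets(self.edge_cases)}",
            f"Constraints:\n{bullets(self.constraints)}",
            f"Output format:\n{self.output_format}",
            f"Definition of done:\n{bullets(self.definition_of_done)}",
        ])


if __name__ == "__main__":
    spec = PromptSpec(
        feature="Checkout",
        scope="Card payments only; wallets are out of scope",
        acceptance_criteria=["A valid card creates an order with status PAID"],
        edge_cases=["Expired card", "Amount of 0"],
    )
    print(spec.render())
```

Because the rendered prompt is deterministic, it can be versioned and reviewed in a PR like any other test asset.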

3) AI-output verification mindset

You can spot:

- missing assertions

- false “waits” (fixed sleeps, or waits on a condition that doesn’t prove the app is ready)

- weak validation (“element exists” instead of “state is correct”)

- untestable assumptions

4) Observability-first debugging

You treat failures like engineering signals:

- capture artifacts automatically

- summarize root cause patterns

- reduce reruns by improving evidence

5) Data literacy + privacy hygiene

You know what can and cannot enter prompts.

You build redaction and synthetic-data practices as defaults.
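Synthetic data is easier to make the default when it is as convenient as copying production rows. Here is a sketch of a seeded generator using only the standard library. The fields and name pools are hypothetical, and the `example.com` domain is used because it is reserved for examples (RFC 2606), so generated emails never reach a real inbox.

```python
import random
from dataclasses import dataclass

# Hypothetical name pools; no real customer records are involved.
FIRST_NAMES = ["Asha", "Ravi", "Meera", "Arjun", "Lena", "Tom"]
LAST_NAMES = ["Iyer", "Kumar", "Smith", "Okafor", "Novak", "Chen"]


@dataclass(frozen=True)
class SyntheticCustomer:
    customer_id: str
    name: str
    email: str
    credit_limit: int


def synthetic_customers(count: int, seed: int = 42) -> list[SyntheticCustomer]:
    """Generate reproducible fake customers: the same seed always yields the same rows."""
    rng = random.Random(seed)  # local RNG, so other code's random calls don't disturb it
    customers = []
    for n in range(1, count + 1):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        customers.append(SyntheticCustomer(
            customer_id=f"CUST-{n:05d}",
            name=f"{first} {last}",
            # example.com is reserved for examples, so no real inbox is ever hit
            email=f"{first.lower()}.{last.lower()}{n}@example.com",
            credit_limit=rng.randrange(0, 10_001, 500),  # 0 to 10,000 in steps of 500
        ))
    return customers


if __name__ == "__main__":
    for customer in synthetic_customers(3):
        print(customer)
```

Because the data is seeded, a failing CI run can be reproduced locally with identical rows, and those rows are safe to paste into a prompt.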

6) CI integration and governance

You can place GenAI in the SDLC responsibly:

- where it saves time

- where it’s too risky

- what approvals or gates are needed

Key takeaways

- GenAI improves testing speed and triage—but scaling safely requires trust engineering, not excitement.

- The winning QA teams build a Trust Pipeline: grounding → constraints → verification → observability → risk-based review.

- “GenAI skill” in 2026 means measurable capabilities: intent-first design, verification mindset, privacy hygiene, and CI integration.

Conclusion

GenAI is already reshaping testing—but the winners in 2026 won’t be “the teams that tried AI.” They’ll be the teams that made AI trustworthy.

At Testleaf, our focus is simple: help QA professionals build skills that hold up in production—skills that create confidence for stakeholders, clarity for engineering teams, and evidence for every release.

Because in 2026, speed matters… but trust ships.

GenAI in software testing isn’t about hype—it’s about building repeatable trust, measurable outcomes, and safer releases.

Join our webinar: AI Master Class for QA Professionals – Master AI Agents

Learn how to apply GenAI workflows and AI agents in real QA projects.

FAQs

1. What is GenAI in software testing?

GenAI in software testing means using generative models to assist activities like test scenario creation, code refactoring, test data generation, and failure triage. The key is treating outputs as untrusted until verified with gates and evidence.

2. What are the biggest benefits of GenAI for QA teams?

Faster coverage ideation, reduced maintenance effort, better failure summarization, and improved test data generation are common benefits reported across the industry.

3. What are the biggest limitations of GenAI in testing?

Hallucinated tests, weak assertions, non-deterministic behavior, privacy risks, and difficulty integrating into CI/CD and governance workflows are major constraints.

4. Will GenAI replace software testers in 2026?

More realistically, GenAI will replace repetitive portions of testing work—and reward testers who can turn AI outputs into reliable, auditable quality signals.

5. What skills should QA learn for 2026?

Intent-first design, prompt-as-spec, verification mindset, observability-first debugging, privacy/data literacy, and CI integration are the core skills that compound.

6. How do you reduce hallucinations in AI-generated tests?

Use bounded context, strict output templates, explicit constraints, automated verification gates, and human review focused on risk—not style.


Author’s Bio:

Content Writer at Testleaf, specializing in SEO-driven content for test automation, software development, and cybersecurity. I turn complex technical topics into clear, engaging stories that educate, inspire, and drive digital transformation.

Ezhirkadhir Raja

Content Writer – Testleaf